With the rapid evolution of the risk landscape in Europe and beyond, civil protection authorities are increasingly required to manage emergencies that unfold faster, affect wider areas, and demand more coordinated, data-driven responses.

In this context, artificial intelligence (AI) is being tested and applied to support disaster risk management. Far from being a futuristic concept, AI is already helping to detect fires from space, improve training for emergency responders, and even support robots during search-and-rescue missions. These tools can help teams act faster, see risks more clearly, and make better-informed decisions.

For civil protection services, this technological shift offers major opportunities to strengthen situational awareness, optimise resource allocation, and enhance the safety of first responders. Yet it also raises important questions about data reliability, ethical use, interoperability, and the readiness of organisations to integrate complex digital tools into operational practice.

These themes will be explored further at the Global Initiative on Resilience to Natural Hazards Through AI Solutions workshop, taking place on 11–12 December in Brussels. The event will bring together civil protection authorities, experts, researchers, and policymakers to discuss how AI can support preparedness and operational decision-making, and what conditions are needed for its responsible and effective use.

AI across the disaster management cycle

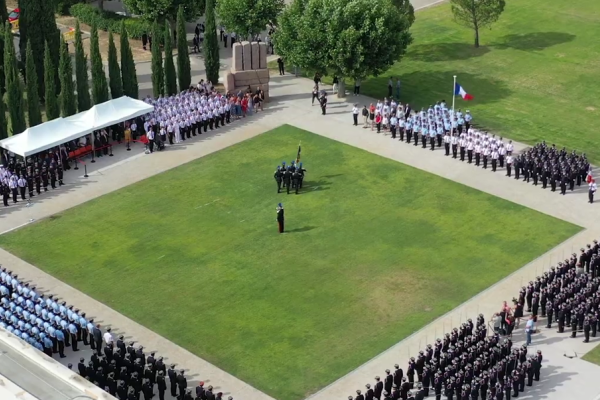

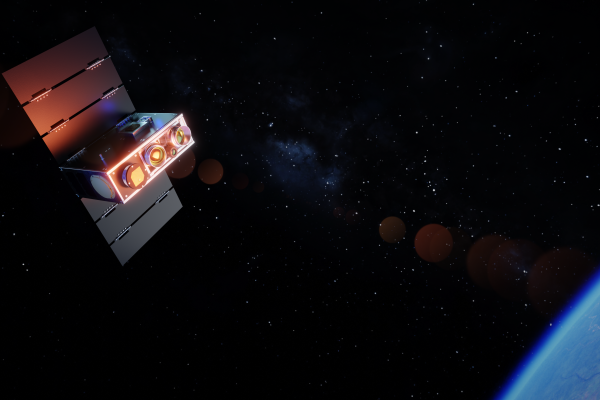

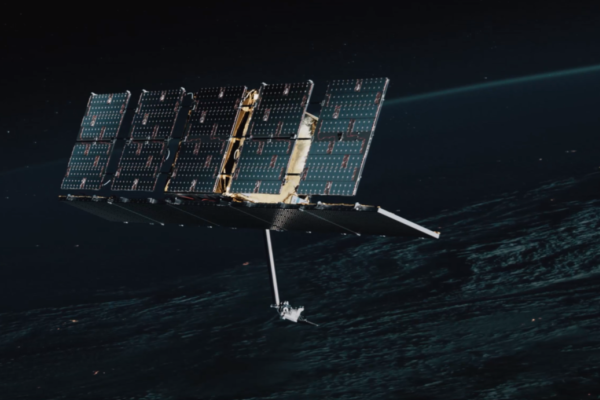

Artificial intelligence is now being explored across multiple stages of disaster risk management. In space-based monitoring, AI-enhanced satellite imagery is helping detect hazards faster and assess their impact even under limited visibility, as demonstrated by companies such as OroraTech and ICEYE. In the field, projects like SYNERGISE and CARMA are testing how AI-enabled robotics can support decision-making and assist first responders in complex and hazardous environments. And as these technologies evolve, training institutions such as the École Nationale Supérieure des Officiers de Sapeurs-Pompiers (ENSOSP) are examining how AI can strengthen learning, preparedness, and operational coordination within fire and rescue services.

The European Union is supporting this shift through its Preparedness Union Strategy, promoting innovation and the practical use of AI in civil protection. The following stories highlight EU-funded projects and private organisations that presented their work during the AI in Disaster Risk Management Workshop held in Brussels in June 2025. While public–private cooperation in this field is still developing, the workshop underlined the wider potential of future collaboration in advancing AI for civil protection.

To better understand how this landscape is evolving, we spoke with project teams from OroraTech, ICEYE, SYNERGISE, CARMA, and ENSOSP. Their insights illustrate how AI is already being applied across different parts of the disaster management cycle, as well as the practical and ethical challenges that emerge as these systems move closer to real-world use.

CARMA: Human–robot symbiosis in disaster response

SYNERGISE: Enhancing operational safety through autonomous systems

ENSOSP: Integrating AI into firefighter training and crisis management

OroraTech: Detecting and managing wildfires from space

ICEYE: AI-enabled flood intelligence from space

As the use of AI expands across civil protection, it also brings a set of practical and ethical challenges that must be addressed for these tools to function reliably in real emergencies. Questions around trust, explainability, data integration, and procurement are now central to discussions taking place across Europe. The following section outlines some of the main issues shaping this debate and what they mean for the responsible adoption of AI in disaster risk management.

Limits and lessons: challenges in bringing AI into practice

Despite clear progress, bringing artificial intelligence into real-world disaster management remains a complex task. AI systems can extend human capability, but their adoption depends on trust, transparency, reliable data, and close alignment with operational needs. Three issues stand out: trust and human oversight, data integration and interoperability, and the path from prototype to procurement.

Trust, explainability & human oversight: In life-saving situations, decisions based on opaque algorithms raise serious concerns. Civil protection authorities must be able to understand and explain the recommendations made by AI systems, especially when they guide real-time operations. “Socially and ethically, there are concerns about trust, transparency, and the acceptance of robots in roles traditionally filled by humans, particularly in sensitive situations involving victims.” — Alexandre Ahmad, CARMA

Training institutions such as ENSOSP stress that explainable AI (XAI) is as much about human confidence as technical design. If AI outputs are difficult to interpret, they risk adding to rather than reducing responders’ workload. “AI can support human decision-making, but it cannot replace professional judgement.” — Quentin Brot, ENSOSP

CARMA’s experience reinforces this lesson: human–robot collaboration depends on transparency and predictability. When users can understand how a system reacts, and why, they are far more likely to trust and adopt it in high-risk environments. “Trust will come through transparency — responders must understand not just what an AI tool tells them, but why.” — Dr Nicholas Vretos, CARMA

Data, integration & interoperability: A major challenge for AI in disaster management is how data from different sources — satellites, drones, sensors, and field reports — is gathered and shared. Effective use of AI depends on clean, harmonised, and interoperable datasets, but information is often fragmented or collected under pressure.

The work of OroraTech, ICEYE, and SYNERGISE highlights the difficulty of combining these inputs into one operational picture. “AI can enhance response capacity — but when communications fail or systems don’t connect, its advantages quickly diminish.” — Dr Sabina Ziemian, SYNERGISE

The need for standardised data protocols and shared interfaces is now widely recognised across Europe’s civil protection community. Without them, even the most advanced AI tools risk staying confined to pilot projects rather than becoming part of daily operations.

From prototype to procurement: Many AI tools in disaster management remain at the pilot stage — tested successfully but not yet part of routine operations. Procurement rules, certification processes, and short funding cycles often slow progress.

Developers and responders agree that innovation needs to move faster and stay close to user needs. “It takes time to build confidence in new technology. Users need proven accuracy, reliable monitoring, low false-positive rates, and transparent data before committing to large-scale use.” — Julia Gottfriedsen, OroraTech

Bringing AI from prototype to practice will require closer collaboration between researchers, policymakers, and practitioners, ensuring that new tools are practical, trusted, and ready for real-world use.

Artificial intelligence in disaster risk management is no longer a theoretical prospect — it is already being tested and applied across Europe, from space-based monitoring to field robotics and advanced training environments. Yet the success of these innovations depends not only on algorithms, but on trust, training, and transparency.

The projects and organisations featured here, from OroraTech and ICEYE to SYNERGISE, CARMA, and ENSOSP, demonstrate that AI, when responsibly designed and deployed, can help build a more resilient and responsive Europe. Their work shows that progress is as much about people as it is about technology.

Artificial intelligence is changing how we anticipate, assess, and respond to risks — but its value depends on how well humans remain at the centre of these systems.

This conversation will continue at the Global Initiative on Resilience to Natural Hazards Through AI Solutions workshop in Brussels on 11–12 December.